The Origins of Computers | Exploring the First Generation (1940–1956)

The history of modern computing begins with the First Generation of Computers, which spanned roughly 1940 to 1956. These early machines laid the foundation for the digital revolution and showcased human ingenuity, even though they were large, complex, and limited by today’s standards.

What Are First Generation Computers?

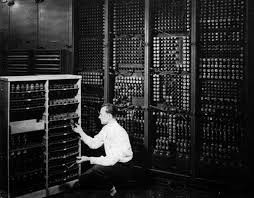

The first generation of computers refers to the earliest electronic computing devices, developed during and after World War II. These machines relied on vacuum tubes for processing and memory, making them bulky, power-hungry, and prone to overheating.

Despite these limitations, first-generation computers were groundbreaking, capable of performing calculations far faster than any mechanical calculator or human could at the time.

Key Characteristics

Here are some notable features of first-generation computers:

- Vacuum Tubes: Used for circuitry, with magnetic drums commonly serving as memory. Learn more in What is Hardware? | Input, Output, Processing, Storage.

- Size: Occupied entire rooms and required special cooling systems.

- Speed: Operation times were measured in milliseconds, slow by today’s standards but revolutionary back then.

- Programming Language: Machine language (binary code), with early assembly languages appearing toward the end of the period.

- Input/Output: Relied on punched cards, paper tape, and printouts. See evolution in Types of Input Devices and Output Devices Explained.

Examples of First Generation Computers

ENIAC (Electronic Numerical Integrator and Computer)

- Completed in 1945 by John Mauchly and J. Presper Eckert at the University of Pennsylvania, and publicly unveiled in 1946.

- Widely regarded as the first general-purpose, programmable electronic computer.

- Contained over 17,000 vacuum tubes and weighed about 30 tons.

- Used for artillery trajectory calculations by the U.S. Army.

UNIVAC I (Universal Automatic Computer I)

- Completed in 1951.

- First commercially available computer in the U.S.

- Used by government and business sectors, including the U.S. Census Bureau.

IBM 701

- Introduced in 1952.

- IBM’s first commercial scientific computer.

- Used for scientific calculations and defense purposes.

How Did They Work?

First-generation computers processed instructions written in machine language, composed of strings of binary digits (0s and 1s). Programmers entered programs by hand, either by rewiring plugboards and setting switches, as on the ENIAC, or by punching instructions and data onto cards or paper tape. Learn how hardware and software interact in How Hardware and Software Work Together.
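To make the idea of binary machine language more concrete, here is a minimal Python sketch of a toy machine. The 8-bit instruction format (a 3-bit operation code followed by a 5-bit memory address), the four operations, and the names `OPCODES`, `decode`, `run`, and `PROGRAM` are all assumptions invented for illustration. They do not reproduce the instruction set of the ENIAC, UNIVAC I, IBM 701, or any other real machine.

```python
# Hypothetical 8-bit instruction format: 3-bit opcode + 5-bit address.
# This is an illustrative toy, not the instruction set of any real machine.
OPCODES = {
    0b000: "HALT",
    0b001: "LOAD",   # accumulator = memory[address]
    0b010: "ADD",    # accumulator += memory[address]
    0b011: "STORE",  # memory[address] = accumulator
}


def decode(word):
    """Split an 8-character binary string into (operation name, address)."""
    value = int(word, 2)
    opcode = value >> 5          # top 3 bits
    address = value & 0b11111    # bottom 5 bits
    return OPCODES[opcode], address


def run(program, memory):
    """Execute binary instruction strings against a list used as memory."""
    accumulator = 0
    for word in program:
        operation, address = decode(word)
        if operation == "HALT":
            break
        if operation == "LOAD":
            accumulator = memory[address]
        elif operation == "ADD":
            accumulator += memory[address]
        elif operation == "STORE":
            memory[address] = accumulator
    return memory


# Program: load memory[0], add memory[1], store the sum in memory[2], halt.
PROGRAM = ["00100000", "01000001", "01100010", "00000000"]
```

For instance, `run(PROGRAM, [2, 3, 0])` leaves the sum 5 in the third memory cell. Even this tiny addition takes four hand-written binary words, which hints at why programming these machines was slow and error-prone.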

They had no operating systems in the modern sense. Operators controlled everything manually, and it took teams of engineers and operators to run even basic programs.

Limitations

Despite their achievements, first-generation computers had several drawbacks:

- Enormous Size: Required large facilities and infrastructure.

- High Heat Output: Frequent vacuum tube failures due to overheating.

- Limited Storage: Very small main memory, with longer-term storage limited to media such as punched cards, paper tape, and magnetic tape.

- Non-portable: Fixed installations with extremely low flexibility.

Advantages at the Time

- Faster Than Mechanical Devices: Reduced computational time drastically.

- Automation: Once set up, performed repetitive calculations without human intervention at each step.

- Scientific and Military Use: Helped with war logistics, weather prediction, and atomic research.

Transition to Second Generation

By the mid-1950s, transistors began to replace vacuum tubes. This innovation marked the end of the first generation and the beginning of the second, ushering in smaller, faster, and more reliable computers. Dive deeper in Second Generation Computers (1956–1963).

Conclusion

The First Generation of Computers was a monumental step in the evolution of technology. Though primitive by modern standards, machines like the ENIAC and UNIVAC demonstrated the incredible potential of digital computing. These computers not only solved complex problems faster but also inspired generations of engineers, scientists, and innovators to continue advancing technology.